Using AI during a participatory process, part 3


Allow participants to generate images

Example platforms with this feature: All Our Ideas, Assembl, IdeaScale, UrbanistAI, deliberAIde, Your Priorities

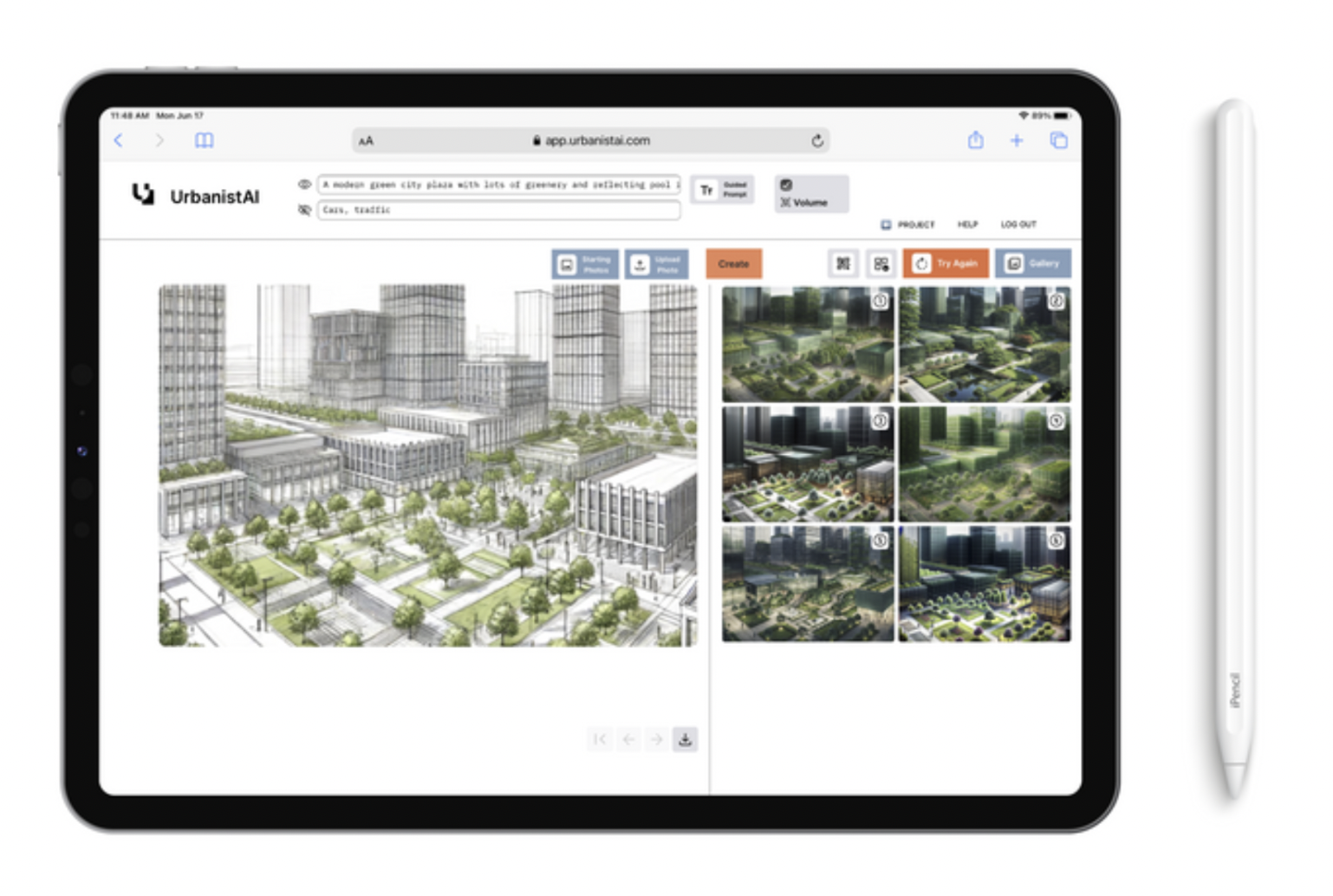

The UrbanistAI app (https://site.urbanistai.com/). Image from UrbanistAI.

Generative AI models can produce compelling images, and even videos, from just a short text prompt. The results have been hit-or-miss, though, and getting a model to produce the desired result can still be complicated. Perhaps for this reason, image generation features remain fairly rare on digital participation platforms.

UrbanistAI offers a good example of how these features work. The tool uses image generation to assist participants during the ideation stage, drawing on generative AI's capacity for imaginative, open-ended output to help them visualize their dreams and desires for their place-based communities. Users can draw on and annotate the images, including in an iPad app.

Allow participants to generate videos

Example platforms with this feature: None observed yet! Mainstream tools like Sora and Veo are leading the way.

This feature could become more common on participatory platforms in the near future, as the underlying technology improves and becomes more accessible and affordable. Mainstream AI providers already let users generate video clips (Sora and Veo), extend existing clips (Adobe), and turn still photos into videos (Google Photos). It remains to be seen whether generative video will be useful during a participatory process (for example, letting users create and share videos expressing their views) or after the fact (as another form of sensemaking).

Recommend related participatory content

Example platforms with this feature: Your Priorities, Decidim

Recommendation engines help users discover new and related content on platforms. They're also known to extend users' 'engagement' and the time they spend interacting with a platform. Recommendation engines usually select the content they show users using algorithms and AI.

On a digital participation platform, this 'content' might consist of other users' ideas or proposals up for a vote. Promoted proposals may receive more discussion and votes. Digital participation platforms must ensure that everyone's proposals are treated equally, but recommendation engines aren't always transparent about why they select some proposals over others. Any algorithmic feature, recommendation engine or otherwise, that prioritizes one participant's content over others' should be transparent. Recommendation engines are a great place to use open source software and AI that stakeholders can inspect to ensure fairness. The platform offering a recommendation engine should also be able to explain why one participant's proposal gets promoted while another's does not.
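To make the transparency point concrete, here is a minimal Python sketch of a content-based recommender that explains itself. It is not how Your Priorities or Decidim work; it assumes proposals are short text snippets, scores them by word-overlap (cosine) similarity, and returns the shared words alongside each recommendation so an administrator can say exactly why a proposal was suggested. The proposal texts and function names are hypothetical.

```python
import math
import re
from collections import Counter

# A tiny stopword list; a real system would use a fuller, language-aware one.
STOPWORDS = {"a", "an", "and", "the", "on", "in", "of", "to", "for"}


def vectorize(text: str) -> Counter:
    """Bag-of-words counts for one proposal, ignoring case and stopwords."""
    words = re.findall(r"[a-z]+", text.lower())
    return Counter(w for w in words if w not in STOPWORDS)


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors (0.0 if either is empty)."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def recommend(target_id: str, proposals: dict[str, str], k: int = 2) -> list[dict]:
    """Return the k proposals most similar to target_id, each with its explanation."""
    target = vectorize(proposals[target_id])
    scored = []
    for pid, text in proposals.items():
        if pid == target_id:
            continue
        vec = vectorize(text)
        shared = sorted(target.keys() & vec.keys())
        scored.append((cosine(target, vec), pid, shared))
    # Highest similarity first; ties broken by proposal id so the order is reproducible.
    scored.sort(key=lambda s: (-s[0], s[1]))
    return [
        {"id": pid, "score": round(score, 3), "because_shares": shared}
        for score, pid, shared in scored[:k]
    ]


proposals = {
    "p1": "Add protected bike lanes on Main Street",
    "p2": "Build more bike parking near Main Street shops",
    "p3": "Extend library opening hours on weekends",
    "p4": "Plant trees along bike lanes",
}

for rec in recommend("p1", proposals):
    print(rec)
# {'id': 'p2', 'score': 0.433, 'because_shares': ['bike', 'main', 'street']}
# {'id': 'p4', 'score': 0.365, 'because_shares': ['bike', 'lanes']}
```

Because the score depends only on proposal text, not on votes or engagement, no participant's proposal is boosted simply for already being popular. A production system would need much more (stemming, multilingual support, and so on), but the principle of returning an explanation with every recommendation carries over.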

Moderating participant discussions

Example platforms with this feature: All Our Ideas, Fora, Stanford Online Deliberation Platform, adhocracy+, Social Pinpoint, Bang the Table EngagementHQ, Unanimous AI, Go Vocal, Your Priorities, SocietySpeaks.io, TrollWall.ai, Dembrane, Deliberation.io

Manually moderating active discussions can be a burden for understaffed administrative teams. AI developers like Google's Jigsaw have long offered free resources, such as the Perspective API, that use AI to help moderate discussions and reduce toxicity.
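As an illustration, the sketch below shows roughly how a platform might call the Perspective API and route high-scoring comments to a human moderator instead of deleting them. The endpoint and the request and response fields follow Jigsaw's public documentation as we understand it; the 0.8 threshold, the function names, and the choice to hold rather than remove comments are our own assumptions. Only `score_comment` touches the network, and it needs your own API key.

```python
import json
import urllib.request

# Endpoint as given in Jigsaw's public Perspective API documentation.
PERSPECTIVE_URL = "https://commentanalyzer.googleapis.com/v1alpha1/comments:analyze"


def build_request(text: str, lang: str = "en") -> dict:
    """JSON body asking Perspective for a TOXICITY score on one comment."""
    return {
        "comment": {"text": text},
        "languages": [lang],
        "requestedAttributes": {"TOXICITY": {}},
    }


def score_comment(text: str, api_key: str) -> dict:
    """POST one comment to Perspective and return the parsed JSON (needs network and a key)."""
    req = urllib.request.Request(
        f"{PERSPECTIVE_URL}?key={api_key}",
        data=json.dumps(build_request(text)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def triage(response: dict, threshold: float = 0.8) -> str:
    """Route a comment by its toxicity score; a human moderator makes the final call."""
    score = response["attributeScores"]["TOXICITY"]["summaryScore"]["value"]
    return "hold_for_human_review" if score >= threshold else "publish"


# Offline example using a response shaped like Perspective's output.
sample_response = {
    "attributeScores": {"TOXICITY": {"summaryScore": {"value": 0.91, "type": "PROBABILITY"}}}
}
print(triage(sample_response))  # hold_for_human_review
```

In practice you would pass the result of `score_comment` to `triage`. Holding comments for review, rather than deleting them automatically, keeps a person accountable for every removal.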

Even if you use AI to help moderate discussions, we recommend keeping a close eye on what it flags and whose contributions are being flagged. AI models are far from perfect, and language that looks problematic can appear in plenty of innocent contexts. You'll likely want to fine-tune the AI moderator as you see how it performs with your participants. Platforms like Decidim let you train the moderator in real time on the content appearing on your platform, rather than relying only on a pre-trained model whose training data might not include your community.
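One way to keep an eye on whose contributions are being flagged is to compare flag rates across groups of participants, for example by neighborhood or by the language they write in. The minimal sketch below assumes a hypothetical moderation log of `(group, flagged)` pairs and lists any group flagged at more than 1.5 times the overall rate; both the log format and the 1.5 factor are illustrative choices, not a standard.

```python
from collections import defaultdict


def flag_rates(log: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of each group's contributions that the AI moderator flagged."""
    counts = defaultdict(lambda: [0, 0])  # group -> [flagged, total]
    for group, flagged in log:
        counts[group][1] += 1
        if flagged:
            counts[group][0] += 1
    return {group: f / total for group, (f, total) in counts.items()}


def overrepresented(log: list[tuple[str, bool]], factor: float = 1.5) -> list[str]:
    """Groups flagged at more than `factor` times the overall flag rate."""
    overall = sum(flagged for _, flagged in log) / len(log)
    return sorted(g for g, rate in flag_rates(log).items() if rate > factor * overall)


# Hypothetical log: 2 of 20 posts flagged in district_a, 4 of 10 in district_b.
log = (
    [("district_a", False)] * 18 + [("district_a", True)] * 2
    + [("district_b", False)] * 6 + [("district_b", True)] * 4
)
print(flag_rates(log))       # {'district_a': 0.1, 'district_b': 0.4}
print(overrepresented(log))  # ['district_b']
```

A disproportionate rate doesn't prove the moderator is biased, but it tells you where to start reading the flagged comments yourself.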

We also recommend using human facilitators to help guide discussions in productive directions, even if you rely on AI to weed out toxic content.

Moderating spam

Example platforms with this feature: Decidim, Social Pinpoint

Spam isn't always a problem, but some platforms get deluged with spam comments. AI can help here, too, by filtering obvious spam out from genuine participation. Decidim, for instance, can train its anti-spam filters on the specific spam you've already marked for it. Unfortunately, generative AI also makes it easier for bad actors to compose authentic-sounding spam, for the same reasons it can help people draft better proposals. Depending on your sign-up process, though, spam may not be an issue for your program.
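Training a filter on spam you've already marked is a classic use of a naive Bayes classifier. The sketch below is a from-scratch multinomial naive Bayes filter with add-one smoothing, written only to illustrate the idea; we are not claiming it matches Decidim's implementation. The `SpamFilter` class and the training comments are hypothetical.

```python
import math
import re
from collections import Counter


def tokens(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


class SpamFilter:
    """Multinomial naive Bayes spam filter trained on comments moderators have labeled."""

    def __init__(self) -> None:
        self.word_counts = {"spam": Counter(), "ham": Counter()}
        self.doc_counts = Counter()

    def mark(self, text: str, label: str) -> None:
        """Record a moderator's decision: label is "spam" or "ham" (genuine)."""
        self.word_counts[label].update(tokens(text))
        self.doc_counts[label] += 1

    def spam_probability(self, text: str) -> float:
        vocab = set(self.word_counts["spam"]) | set(self.word_counts["ham"])
        total_docs = sum(self.doc_counts.values())
        log_scores = {}
        for label, counts in self.word_counts.items():
            total_words = sum(counts.values())
            # Log prior, smoothed so an empty class doesn't produce log(0).
            score = math.log((self.doc_counts[label] + 1) / (total_docs + 2))
            for w in tokens(text):
                if w in vocab:  # ignore words never seen in training
                    # Add-one (Laplace) smoothing for words seen in only one class.
                    score += math.log((counts[w] + 1) / (total_words + len(vocab)))
            log_scores[label] = score
        # Turn the two log scores into a probability without underflow.
        top = max(log_scores.values())
        weights = {k: math.exp(v - top) for k, v in log_scores.items()}
        return weights["spam"] / (weights["spam"] + weights["ham"])


f = SpamFilter()
for text in ["buy cheap watches now", "cheap pills click this link now",
             "click here to win a free prize"]:
    f.mark(text, "spam")
for text in ["we need a crosswalk near the school", "please add more benches in the park",
             "the park needs better lighting at night"]:
    f.mark(text, "ham")

print(f.spam_probability("click now for cheap watches") > 0.5)              # True
print(f.spam_probability("more lighting near the school crosswalk") > 0.5)  # False
```

Each comment a moderator marks makes the word statistics more specific to your platform, which is what lets this kind of filter adapt to the spam your community actually receives.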

Envision participants' proposals with simulations

Example platforms with this feature: UrbanistAI, Urban Platform, CityGML

AI can help people simulate potential outcomes of the options they're considering. A common form of simulation used in urban planning and place-based decisionmaking is called a Digital Twin: an AI model built to emulate a real-world system, such as a city or neighborhood, as closely as possible. Once the model has been created, planners and other stakeholders can simulate the likely outcomes of a given intervention.

For example, UrbanistAI simulates policy decisions. It can analyze a dataset on a city's traffic and air quality to visualize the effect different mobility interventions might have on residents' health.
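Real digital twins combine traffic flows, emissions, weather, and much more, so the following is only a toy to illustrate the basic workflow: calibrate a model against observations, then ask it "what if?" It assumes, hypothetically, that roadside NO2 rises linearly with traffic volume, fits a least-squares line to made-up measurements, and predicts the level after a 25% traffic reduction. The numbers and function names are invented for illustration and say nothing about any real city.

```python
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def what_if(model: tuple[float, float], baseline_traffic: float, reduction: float) -> float:
    """Predicted pollutant level if traffic falls by `reduction` (0.25 means 25%)."""
    slope, intercept = model
    return slope * baseline_traffic * (1 - reduction) + intercept


# Made-up observations: vehicles per hour vs. roadside NO2 (µg/m³).
traffic = [200, 400, 600, 800]
no2 = [20, 30, 40, 50]

model = fit_line(traffic, no2)
print(model)                      # (0.05, 10.0)
print(what_if(model, 800, 0.25))  # 40.0
```

A straight line through four invented points is obviously not a digital twin; the point is the two-step pattern of calibrating against data and then querying scenarios, which is what lets stakeholders compare interventions before committing to one.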

Synthetic Participation: Proceed with caution

AI's ability to synthetically represent complex systems has inspired research into creating synthetic agents as proxies for engaging actual people. Google DeepMind teamed up with Stanford and other researchers to create AI agents that, the authors claim, can reliably predict what the people themselves reported, based on a two-hour interview with each person. The accuracy of the synthetic person-agents suffered in other situations, such as economic games.

Future research will likely improve upon these results, but the entire direction of this work represents an existential decision point for participatory democracy. Do we want to cede our involvement to AI proxies that may (or may not) represent what we would say or do in a given situation? Even if they proved accurate, what do we lose from people not personally engaging with one another, within communities and between the elected and the governed? If decisionmakers can only consult AI avatars, will it be possible to heed the thoughts and lived experiences expressed by AI with the same care that comes when speaking with human constituents?

Should we celebrate a near future where "politicians can talk to these avatars and get to know members of the public in a really granular way"? What do we lose when public officials can skip some of the few remaining opportunities for engaging their constituents, and vice versa? Is it another dangerous step towards a disenfranchised citizenry?

Much of the value in democratic systems lies in the interactions we have with one another. Participation and engagement with political systems drive benefits well beyond the specifics of a given policy outcome. AI increasingly tempts us to automate ourselves out of our involvement with an ever-expanding range of activities, with all of the associated promises and risks discussed in this report. But is automating away the active role of the people in democracy a step too far?

Complete tasks for participants with agents

Example platforms with this feature: Thinkscape, commercial AI platforms

The next phase of AI development is "Agentic AI", where the AI's role evolves from advising users to actually taking actions on their behalf. While today's AI might help you compose your to-do list, for example, an agentic AI tool would go and complete the tasks on the list!

Commercial AI platforms are already rolling out agentic AI to users, though their agents' speed and ability to accomplish tasks still leave much to be desired. You can follow benchmark tests of AI agents on resources like OSWorld. To our knowledge, no major participation platform has introduced true AI agents for participants yet, although some social networks have.

A demo of OpenAI's Agent mode, which seeks to perform tasks for users: https://www.youtube.com/watch?v=1jn_RpbPbEc

Although not identical to today's commercial AI agents, Thinkscape has been an early leader in using AI agents for participation. Rather than attempting to do your shopping for you, its agents help facilitate small-group discussions. They also float between groups to cross-pollinate ideas, and they can bubble up what's being discussed across many small groups to the admin level. By employing AI agents in these ways, Thinkscape strikes a balance between giving participants ample opportunity to speak and be heard in small groups, and giving process hosts the ability to scale conversations to include many more people.

Agentic AI generally introduces new risks beyond those of today's generative AI. It's one thing for an AI model to get something completely wrong while providing information to a user. It's another thing if it acts on that wrong information, sending a message to a person, buying something, or deleting data based on that erroneous understanding.
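A common mitigation for this risk is to require explicit human confirmation before an agent takes any irreversible action. The minimal sketch below assumes a hypothetical plan of `Action` objects, each marked as reversible or not, and a `confirm` callback standing in for a prompt to the participant. The actions only append to a log, so nothing is actually sent, bought, or deleted.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Action:
    name: str
    detail: str
    irreversible: bool  # e.g. sending, buying, deleting


def run_plan(actions: list[Action], confirm: Callable[[Action], bool]) -> list[str]:
    """Carry out an agent's plan, pausing for human approval before irreversible steps."""
    log = []
    for action in actions:
        if action.irreversible and not confirm(action):
            log.append(f"skipped {action.name}: not confirmed")
            continue
        # Simulated: a real agent would call a tool or API here.
        log.append(f"done {action.name}: {action.detail}")
    return log


plan = [
    Action("draft_comment", "draft a comment on the bike-lane proposal", irreversible=False),
    Action("submit_comment", "post the comment publicly", irreversible=True),
    Action("send_message", "email the comment to a councillor", irreversible=True),
]


def participant_approves(action: Action) -> bool:
    """Stand-in for a real prompt: this participant approves posting but not emailing."""
    return action.name == "submit_comment"


for line in run_plan(plan, participant_approves):
    print(line)
# done draft_comment: draft a comment on the bike-lane proposal
# done submit_comment: post the comment publicly
# skipped send_message: not confirmed
```

In a real deployment, the confirmation step would show the participant, in plain language, exactly what will be sent, bought, or deleted before anything happens. This also speaks to the digital-literacy concern below: the agent cannot act on someone's behalf without their say-so.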

Another concern with agentic AI is that in contexts with low digital literacy, AI agents could begin supplanting human participation by acting on people's behalf without sufficient involvement from the participants themselves.

Malicious actors are also likely to adapt to AI agents and try to exploit them to scam people. Proceed with great caution.
