Using AI after a participatory process finishes


Help make sense of it all

Example platforms with this feature: Sensemaker, Assembl

Sensemaking helps participants and admins alike understand the results of a participatory process. The goal is to communicate how the process went and to share the results in a way that helps participants feel seen and heard, and confident that their time was well spent.

So far, we've observed that many AI features on participation platforms have been introduced at this stage, possibly because it is seen as less risky than using AI during the core participatory activities. Unfortunately, many of the AI-powered sensemaking features on the market are visible only to the admins hosting the participatory process, not to the participants themselves. This is a missed opportunity to show people what they helped make happen. Fortunately, there are exceptions.

A graphic novel produced by Assembl. Graphic taken from Assembl

For example, Assembl distills wide-ranging digital debates down and represents them in visually creative formats like mindmaps, videos, and even a comic strip summary. The platform helps tell the story of what happened in the group's deliberation process in highly legible ways that everyone can understand.

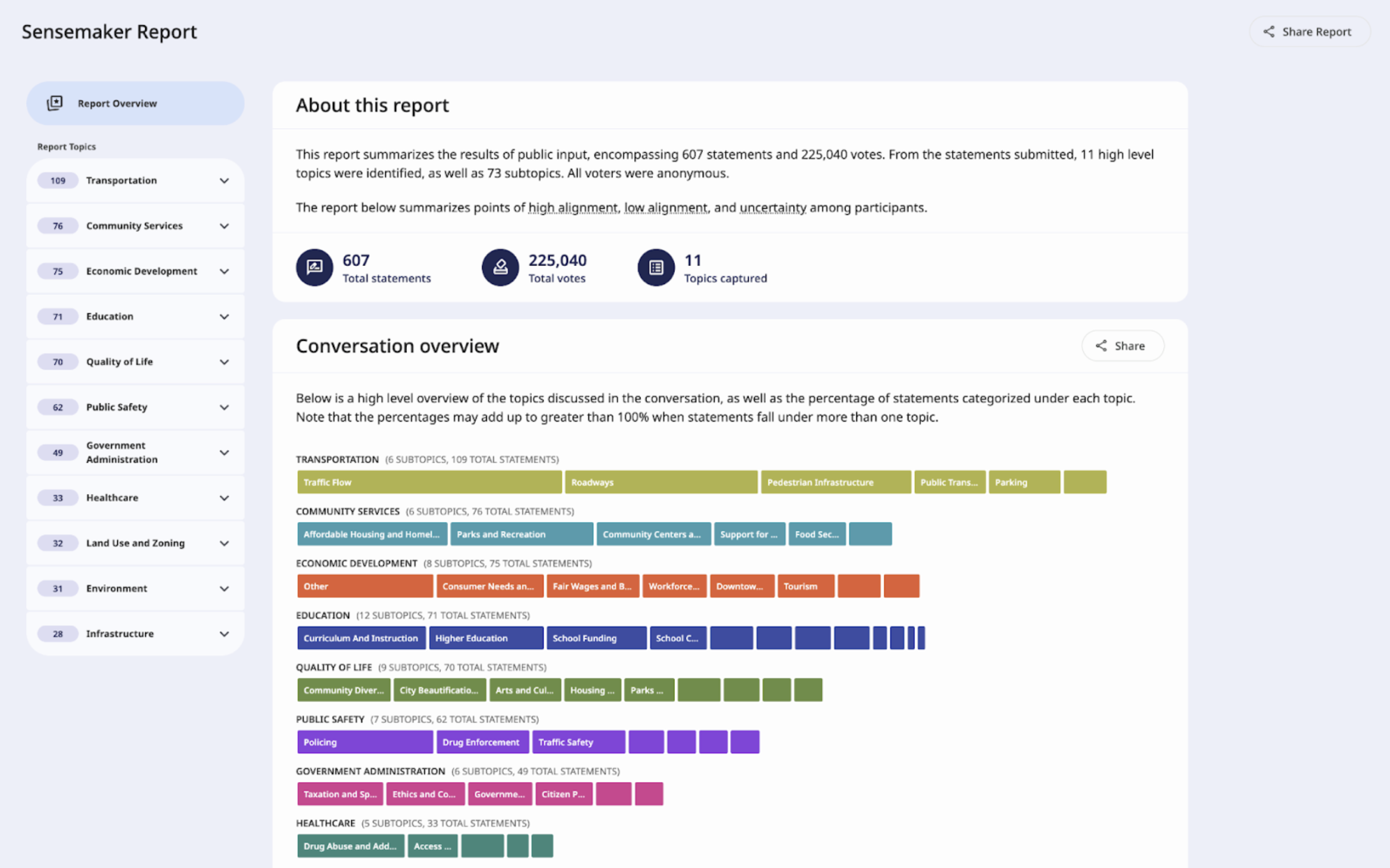

Google's Jigsaw team has also launched its own free product, Sensemaker, to provide this service. The open source tool is under active development and can be used with a variety of other participation platforms.

Compose written summaries of the process

Example platforms with this feature: Assembl, CartoDEBAT, Insights, Fora, Go Vocal, Bang the Table EngagementHQ, Polco, Panoramic, Konveio, parlament.fyi

In addition to summarizing aggregated contributions, AI can be, and is being, used to summarize entire participatory processes. That way, participants and hosts alike get a digestible version of a voluminous discussion involving tens of thousands of participants.
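A discussion with tens of thousands of contributions can exceed what a model reads comfortably in a single pass. One common workaround, sketched here under our own assumptions rather than taken from any platform listed above, is hierarchical ("map-reduce") summarization: summarize batches of contributions, then summarize those summaries, until one summary remains. The `summarize` callable and the `batch_size` parameter are hypothetical placeholders for whatever model call and batch size a host actually uses.

```python
from typing import Callable, List

def chunk(items: List[str], size: int) -> List[List[str]]:
    """Split items into consecutive batches of at most `size` elements."""
    return [items[i:i + size] for i in range(0, len(items), size)]

def map_reduce_summarize(
    contributions: List[str],
    summarize: Callable[[List[str]], str],
    batch_size: int = 50,
) -> str:
    """Summarize batches, then summaries of summaries, until one call suffices.

    `summarize` is a hypothetical stand-in for an LLM call that turns a list
    of texts into a single summary string.
    """
    if batch_size < 2:
        raise ValueError("batch_size must be at least 2 so each level shrinks")
    level = contributions
    # Map step: keep collapsing batches while there are too many texts for one call.
    while len(level) > batch_size:
        level = [summarize(batch) for batch in chunk(level, batch_size)]
    # Reduce step: one final call over whatever remains.
    return summarize(level)
```

With 120 contributions and `batch_size=50`, this makes three batch calls (50, 50, and 20 texts) followed by one final call over the three batch summaries. Each extra level is another point where minority views can drop out, so keeping the number of levels small is worthwhile.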

As one example, a 2024 study published in Science by Google DeepMind and Stanford found that AI generated more agreeable summaries of discussions than humans, while still representing the minority views that had been expressed.

Generative AI is known to miss things, though. In line with the Nesta report, we agree with the UK public that sensitive or local engagements should receive a greater degree of human review to ensure the model doesn't miss entire sections of the engagement. This approach, paired with emerging techniques for limiting hallucinations, such as retrieval-augmented generation (RAG), will hopefully reduce this risk in the near future.
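Human review can be prioritized with simple automated checks. The sketch below is a crude lexical heuristic of our own, not a feature of any platform mentioned here: given hypothetical topic labels, each with a few single-word keywords (for example, drawn from how the engagement was structured), it flags topics the AI summary never mentions so reviewers can look at those sections first. It cannot tell whether a topic that is mentioned was summarized accurately.

```python
import re
from typing import Dict, List, Set

def words(text: str) -> Set[str]:
    """Lowercase word set, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))

def uncovered_topics(summary: str, topic_keywords: Dict[str, List[str]]) -> List[str]:
    """Return topics none of whose (single-word) keywords appear in the summary.

    These are candidates for human reviewers to check by hand; the topic
    labels and keyword lists are assumed inputs, not something a model provides.
    """
    summary_words = words(summary)
    return [
        topic
        for topic, keywords in topic_keywords.items()
        if not any(k.lower() in summary_words for k in keywords)
    ]
```

For instance, a summary reading "Residents want safer bike lanes and more frequent buses." checked against cycling, transit, and parks topics would flag only parks for review.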

Collect and display analytics

Example platforms with this feature: Your Priorities, Fora, 76engage, Stanford Online Deliberation Platform, CartoDEBAT, Loomio, Empurrando Juntas, Bpart, Ethelo, adhocracy+, ConsultVox, Converlens, Civocracy, Delib Suite, Go Vocal, coUrbanize, IdeaScale, Cocoriko, Efalia Engage (formerly Fluicity), Bang the Table EngagementHQ, Place Speak, DemocraciaOS, Polco, Crowdsmart, Unanimous AI, Talk to the City, Delib Citizen Space, PublicInput, Konveio, deliberAIde

Process hosts are using AI to analyze the data coming out of the participation program. This may include dedicated analytics tools, such as those offered by digital participation platforms, as well as ad hoc analysis conducted with general-purpose AI models. For the latter, a process host can upload a set of data export files to a model as context and then ask the model specific questions.

Some participation platforms are also integrating AI into their analytics products. For example, a process host can easily "ask" their analytics package where participants are dropping off during the engagement flow rather than build and tag a traditional conversion funnel. DeliberAIde and other platforms promise to "measure deliberation quality".
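Under the hood, a drop-off question like this still reduces to a funnel calculation. The sketch below assumes a hypothetical export that records, for each participant, the index of the furthest step they reached in an ordered list of engagement steps; the step names are illustrative. Computing the numbers deterministically like this is also a practical way to check an AI-generated answer.

```python
from typing import Dict, List, Tuple

def funnel(furthest_step: Dict[str, int], steps: List[str]) -> List[Tuple[str, int, float]]:
    """Return (step, participants who reached it, drop-off rate vs. the previous step).

    `furthest_step` maps a participant ID to the index (into `steps`) of the
    last step they reached. Both inputs are hypothetical export formats.
    """
    # A participant who reached step i also passed through every earlier step.
    reached = [sum(1 for s in furthest_step.values() if s >= i) for i in range(len(steps))]
    rows = []
    for i, name in enumerate(steps):
        previous = reached[i - 1] if i > 0 else reached[i]
        drop_off = 1 - reached[i] / previous if previous else 0.0
        rows.append((name, reached[i], drop_off))
    return rows
```

The step with the largest drop-off rate, `max(rows, key=lambda r: r[2])`, is where participants most often leave the engagement flow.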

Analytics is another area where relying solely on LLMs could be a mistake, given their tendency to hallucinate and to fail at basic math. Newer products chain together several AI tools (known as "tool chaining") rather than relying solely on LLMs. For example, a product might use the LLM to help the user brainstorm the question they want to ask and translate it into the steps needed to answer it, and then call in another tool, such as a calculator, to execute the query and return a trustworthy answer.
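A minimal sketch of the pattern, under clearly stated assumptions: `plan_with_llm` is a hypothetical placeholder for the LLM step (a real system would prompt a model to return a structured plan; simple keyword rules fake it here so the example runs offline), and the allow-listed `TOOLS` do the actual arithmetic with Python's standard `statistics` module. The point is the division of labor: the language model only decides what to compute, and deterministic code computes it.

```python
import statistics
from typing import Callable, Dict, List

# Deterministic tools the planner may choose from (an allow-list).
TOOLS: Dict[str, Callable[[List[float]], float]] = {
    "count": lambda xs: float(len(xs)),
    "median": statistics.median,
    "mean": statistics.mean,
    "max": max,
    "min": min,
}

def plan_with_llm(question: str, columns: List[str]) -> Dict[str, str]:
    """Hypothetical stand-in for the LLM step: turn a question into a plan.

    A real system would ask a model for this structured output; keyword
    matching fakes it here. Falls back to counting the first column.
    """
    q = question.lower()
    tool = next((name for name in TOOLS if name in q), "count")
    column = next((c for c in columns if c in q), columns[0])
    return {"tool": tool, "column": column}

def answer(question: str, data: Dict[str, List[float]]) -> float:
    plan = plan_with_llm(question, list(data))
    # Reject any plan that names a tool or column outside what we allow.
    if plan["tool"] not in TOOLS or plan["column"] not in data:
        raise ValueError(f"Planner proposed an unsupported step: {plan}")
    # The arithmetic happens in ordinary code, not inside the language model.
    return TOOLS[plan["tool"]](data[plan["column"]])
```

For example, with `data = {"rating": [4.0, 5.0, 3.0, 4.0], "age": [34.0, 51.0, 27.0, 45.0]}`, asking "What is the median rating?" routes to `statistics.median` over the ratings. Because the planner's output is checked against an allow-list, a bad plan fails loudly instead of producing a plausible-looking but invented number.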

Data visualization features help make sense of the analytics. Beyond analyzing the data, visualizing it is a powerful way for participation hosts to share what took place with participants. Data visualization has been around for years, but AI has made it far simpler, faster, and cheaper to create a variety of compelling visuals that tell the story of the engagement.

For example, Google's Sensemaker can dynamically build a report webpage that visually presents data on the discussions that took place:

Image taken from Jigsaw (https://github.com/Jigsaw-Code/sensemaking-tools/)

And more!

As you can see, there's endless room for experimentation here. Other ideas that have come up, but that we haven't yet seen enough examples of, include:

Contribution tracking: Using AI to see how participants' own contributions showed up in the end results. This is nearly impossible to do at scale without AI, and could be a powerful reinforcement of the benefits of participating.

Playback: Automatically generate an animated playback of the entire participatory process that people can watch, or use AI to show participants the highlights and key moments.

Smart notifications: What if the AI could learn what we care about and notify us only when something relevant happens?

Driving ongoing engagement after the process: Staying in touch with participants after a process ends is vital, yet it is a common failure of today's programs. AI could help admins do a better job of this while also tailoring communications to the topics and ideas people showed interest in.
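Contribution tracking could start as a matching problem: for each participant contribution, find the final outcomes that resemble it. The sketch below deliberately swaps in a simple stand-in, word-overlap (Jaccard) similarity with a small hypothetical stopword list and threshold, where a real AI approach would more likely compare text embeddings or ask a model to judge relevance. It is only meant to show the shape of the task.

```python
import re
from typing import List, Set, Tuple

# Small illustrative stopword list; a real system would use a fuller one.
STOPWORDS = {"the", "a", "an", "and", "of", "to", "in", "on", "by", "for", "we", "should"}

def tokens(text: str) -> Set[str]:
    """Lowercase alphabetic words, minus stopwords."""
    return set(re.findall(r"[a-z]+", text.lower())) - STOPWORDS

def jaccard(a: Set[str], b: Set[str]) -> float:
    """Share of words two texts have in common (0 = none, 1 = identical sets)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def trace_contribution(
    contribution: str, outcomes: List[str], threshold: float = 0.2
) -> List[Tuple[str, float]]:
    """Return outcomes whose similarity to the contribution meets the threshold, best first."""
    c = tokens(contribution)
    scored = [(o, jaccard(c, tokens(o))) for o in outcomes]
    return sorted((s for s in scored if s[1] >= threshold), key=lambda s: s[1], reverse=True)
```

For instance, the contribution "We need protected bike lanes on Main Street" would match an outcome such as "Build protected bike lanes on Main Street by 2026" and not one about library opening hours. The 0.2 threshold is arbitrary and would need tuning against examples reviewed by people.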

| Stage | Participants | Hosts |
|---|---|---|
| Before (planning) | | |
| During (implementation) | | |
| After (sensemaking, feedback, and ongoing engagement) | | |
