Protect data privacy

Data privacy is particularly critical on participation platforms, which often handle participants' personal and sensitive information. Select platforms that comply with the highest standards of data protection. They should offer clear mechanisms for obtaining users' consent, supporting anonymity if your program calls for it, and storing data securely. Responsible data governance must be a foundational principle in the use of digital tools for civic engagement.

We've outlined some questions you can ask platform providers in the "How to evaluate a platform" section. Most of all, find out where your participants' information goes and who, if anyone, it's shared with. One way to answer this question holistically is to look at the platform provider's governance and business models. Do they align with the responsible treatment of your participants' data?

A legal advisor should review any applicable laws governing personal data and privacy in your jurisdiction. Those laws may be at the city, state, national, and/or international levels.

When considering procuring or using a digital participation platform, first review its privacy policy. Many institutions require a lawyer to approve the policy before procuring a digital platform. If that is the case, build extra time into the process.

Globally, 79% of countries have passed or drafted data protection and privacy legislation. The United Nations Conference on Trade and Development maintains a helpful database of these laws, organized by country. The European Union's landmark General Data Protection Regulation (GDPR) includes principles governing data use. These are worth reviewing even if you are located outside of the EU, as they represent an emerging legal consensus. Other major data protection frameworks include:

Brazil's General Data Protection Law

California's Consumer Privacy Act

China's Personal Information Protection Law

South Africa's Protection of Personal Information Act

India's Digital Personal Data Protection Act

Canada's Personal Information Protection and Electronic Documents Act

The EU's AI Act, which reinforces data protection principles

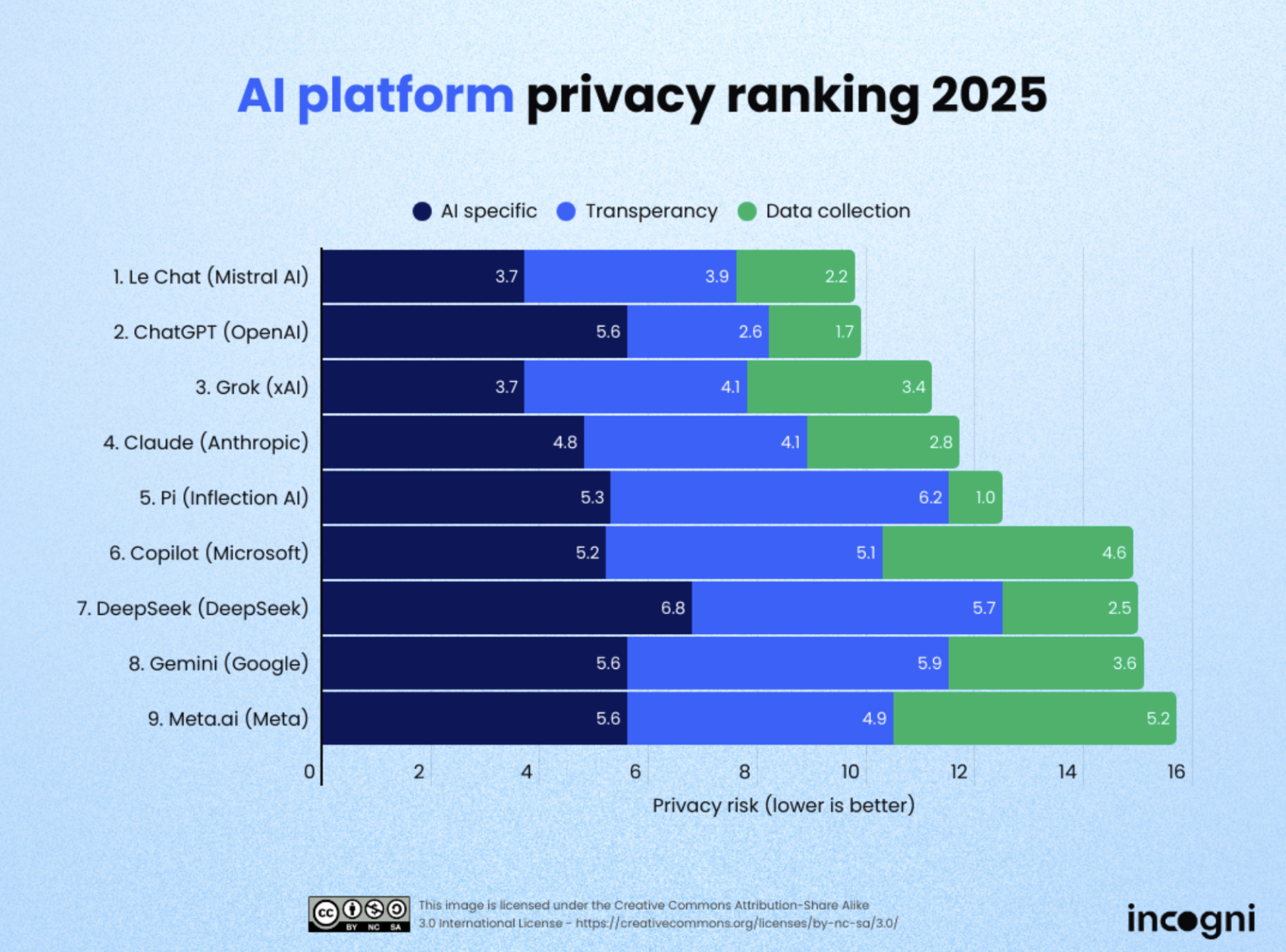

The best (and worst) AI models for user privacy

Incogni ranked nine of the major chatbots in a June 2025 study focused on their privacy policies. The study scored each chatbot on privacy priorities such as whether it trains on users' prompts, how transparent it is about which data it collects, whether users can opt out, and whether it shares information with third parties.

Graphic taken from Incogni.

The results? As of July 2025, the best privacy actors, according to Incogni, are:

Mistral AI’s Le Chat, which collects minimal data and is transparent about data usage.

OpenAI’s ChatGPT, which provides a clear opt-out option and is transparent.

xAI’s Grok, which has solid opt-out options and clear disclosure, but has other issues.

Anthropic's Claude, which doesn't train on user input, so you don't need to adjust any settings, and which provides good to high transparency on data usage.

The bottom 5 models for privacy were:

Inflection AI's Pi

Microsoft's Copilot

DeepSeek

Google's Gemini

Meta's Meta.ai

Meta.ai scored last in part because it shares users' prompts with third parties without allowing them to opt out. ARIJ's AI index gathers links to many of the AI models' data and privacy policies in one place if you'd like to explore in more detail.

Another 2025 report, by the Future of Life Institute, gives Anthropic, OpenAI, and Google grades ranging from C+ down to C-, in that order. Meta scored a 'D' and DeepSeek an 'F' (the lowest score).

It's also important to consider the regulatory environment any given AI company operates in, as companies are often legally required to comply with national security laws. Growing awareness of digital rights has created a market for more privacy-friendly products. In generative AI, that includes alternatives like Lumo, a privacy-centric chatbot from Proton.
